

Summary

The Graph is an open-source query protocol for Ethereum and IPFS. Based on the widely popular GraphQL query language, it saves developers the hassle of setting up a full node and writing scripts to extract relevant information from raw data on disk. Instead, the Graph indexes Ethereum chain data to provide a developer-friendly query interface, with an integrated Truebit-like protocol to incentivize correct responses.

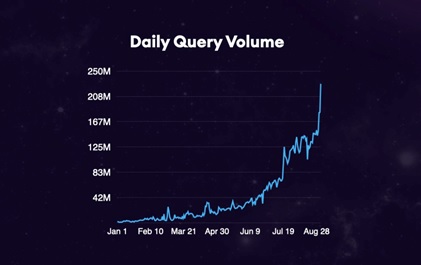

Over the past few months, with the surge of DeFi dApps in the Ethereum ecosystem, the Graph has experienced parabolic growth in request volume, processing as many as 220 million queries a day and 4 billion a month. It wouldn’t be an exaggeration to assert that, despite a number of attempts to build a decentralized AWS, the Graph is the first cloud compute service in Web 3 to gain significant traction (ignoring Ethereum as a world computer, of course).

The perils of centralized cloud

The Graph’s limited focus on serving requests specific to the Ethereum blockchain and IPFS has, ironically, also been its greatest strength in gaining adoption amongst Web 3 developers. However, to date, it has been serving these requests via its hosted service, which is vulnerable to the issues typical of single points of failure: downtime, DDoS attacks and limited scalability. Moreover, the response time of the Graph’s centralized endpoint can be quite high depending on the user’s distance from its servers.

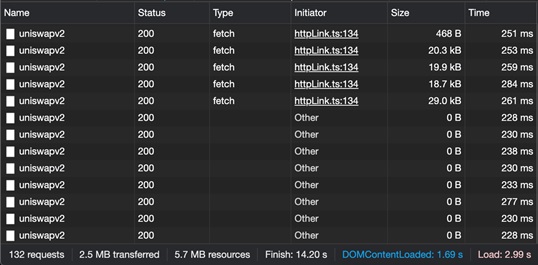

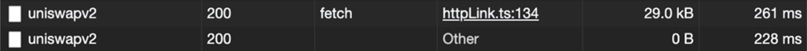

Take, for instance, the following host with a downlink of approximately 86 Mbps loading https://uniswap.info, which uses the Graph to fetch blockchain data.

It takes approximately 10.5 seconds for all the fields on the page to fill up, with tens of requests made to the Graph’s server, each taking around 250ms to return a response.

This is a metric we’ll refer to a few times throughout this article: a single request to the Graph’s centralized endpoint takes about 250ms. (It varies from 227ms for a request expecting no response to 329ms for a request with a 287B response. Around 7 requests took between 1.3 and 1.7 seconds to return a response.)

The repercussions

While today’s dApp users know they are early adopters, driven by a zeal to test new tech or farm yields that far outweighs any UX hiccups, the same will no longer hold true for the next billion users as Web 3 marches towards mass adoption.

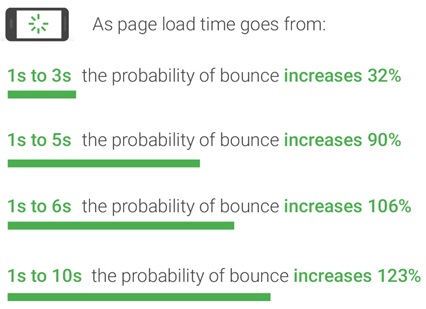

For better context, here are some concrete figures:

These statistics are far from encouraging for uniswap.info, or any other dApp for that matter! That said, speeding up slow websites is an age-old problem. Facebook, Amazon, YouTube and several other heavy websites load almost instantly irrespective of the user’s location. How do they do it? To find the answer, let’s first identify the critical path in the request-response cycle that causes the delay when querying the Graph.

Digging deeper

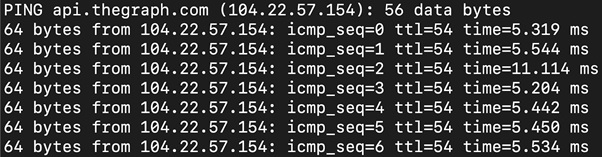

A simple ping to the Graph’s host seems to suggest that network latency is not a significant factor, with a round trip of only about 5ms.

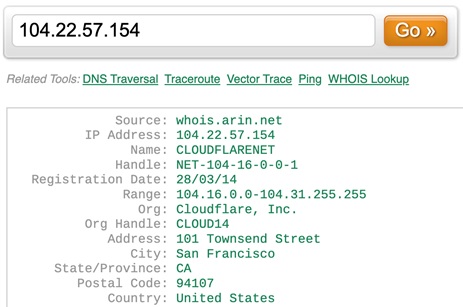

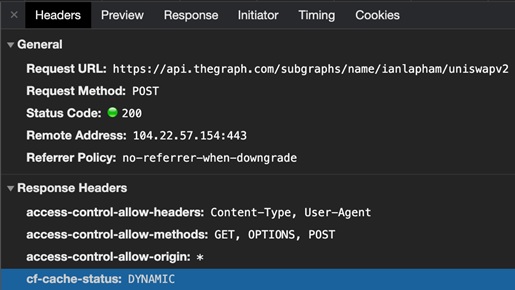

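An IP lookup, however, reveals that api.thegraph.com, like many other sites, uses Cloudflare as a CDN, so the 5ms ping only measures the round trip to Cloudflare’s nearest edge server rather than to the Graph’s own servers.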

In fact, comparing the response times of OPTIONS (no query) and POST (with query) requests made to api.thegraph.com shows that query processing takes only about 30ms, indicating that the remaining 220ms is attributable to the latency between Cloudflare’s proxy server and the Graph’s origin server. This is possibly because the Graph operates servers in only one location worldwide.
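The reasoning above boils down to two subtractions. As a minimal sketch, assuming an OPTIONS request follows the same network path as a POST but does no query work, and that the ping approximates the client-to-edge leg (the `decompose` function and its field names are ours, for illustration only):

```python
from dataclasses import dataclass


@dataclass
class LatencyBreakdown:
    query_processing_ms: float  # extra time a POST (with query) takes over an OPTIONS request
    edge_to_origin_ms: float    # OPTIONS time not explained by the client-to-edge round trip


def decompose(post_ms: float, options_ms: float, edge_rtt_ms: float) -> LatencyBreakdown:
    """Split end-to-end latency into query processing and the edge-to-origin hop."""
    if not (post_ms >= options_ms >= edge_rtt_ms >= 0):
        raise ValueError("expected post_ms >= options_ms >= edge_rtt_ms >= 0")
    return LatencyBreakdown(
        query_processing_ms=post_ms - options_ms,
        edge_to_origin_ms=options_ms - edge_rtt_ms,
    )


# Approximate figures from the measurements above: POST ~250ms, OPTIONS ~220ms, ping ~5ms.
print(decompose(post_ms=250, options_ms=220, edge_rtt_ms=5))
```

This yields about 30ms of query processing and about 215ms for the Cloudflare-to-origin hop; including the ~5ms client-to-edge leg gives the roughly 220ms quoted above.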

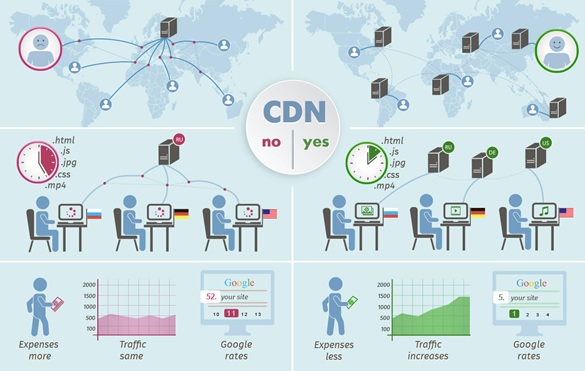

So now we know that network latency is what slows responses from the Graph’s API. But isn’t reducing such network latency exactly the job of a CDN, in this case Cloudflare?

err.. a CDN? Is that how Web 2 is so fast?

For those unaware, a CDN service places caching servers at several locations across the globe, closer to users, which reduces both response latency and the load on the origin server.

In the absence of CDNs, web services resort to one of the following alternatives:

A CDN service caches common requests locally across regions. Since the power law of the Internet dictates that roughly 20% of data accounts for 80% of all requests, most requests never have to hit the origin server and are instead served by low-end but highly distributed machines.
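To make the 80/20 intuition concrete, here is a minimal sketch that assumes request popularity follows Zipf’s law (a common model of power-law web traffic, assumed here rather than taken from the text). It computes the share of requests a cache absorbs when it holds only the most popular objects:

```python
def zipf_hit_ratio(num_items: int, cached_items: int, s: float = 1.0) -> float:
    """Fraction of requests served from cache when the `cached_items` most popular
    of `num_items` objects are cached and popularity follows Zipf(s).

    Under Zipf's law the k-th most popular object is requested with weight 1 / k**s.
    """
    if not 0 <= cached_items <= num_items:
        raise ValueError("cached_items must be between 0 and num_items")
    weights = [1.0 / (rank ** s) for rank in range(1, num_items + 1)]
    return sum(weights[:cached_items]) / sum(weights)


# Cache the top 20% of 1,000 objects under a classic Zipf (s = 1) workload.
ratio = zipf_hit_ratio(num_items=1_000, cached_items=200)
print(f"{ratio:.1%} of requests never reach the origin")
```

With these parameters the cache absorbs about 78.5% of requests, close to the 80/20 rule of thumb; steeper workloads (larger `s`) push the ratio higher.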

A CDN is in fact the key to speeding up access to sites such as Facebook, Amazon and YouTube. It might be tempting to ask: if geographical distribution is what enables CDNs to improve web performance, wouldn’t the Graph’s decentralized network solve its latency issues?

Does a decentralized cloud serve as a CDN?

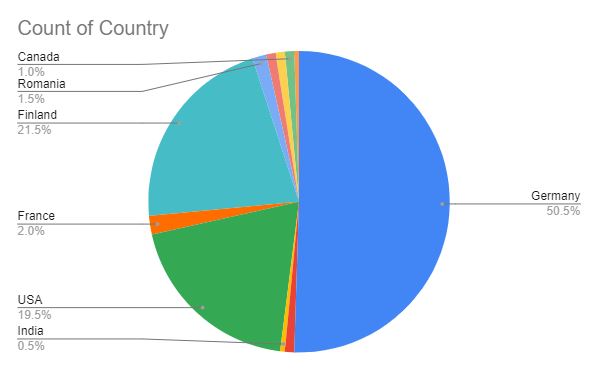

It’s often assumed that a decentralized network is automatically globally distributed and therefore improves performance. That is a very questionable assumption. Cost and intangible operational overhead naturally lead node operators to concentrate in locations that are optimal for them.

Intensive disk access and compute requirements, together with cost, limit the number of locations where a Graph node can be placed, which increases latency for users. This is evident in none other than the Graph’s testnet itself.

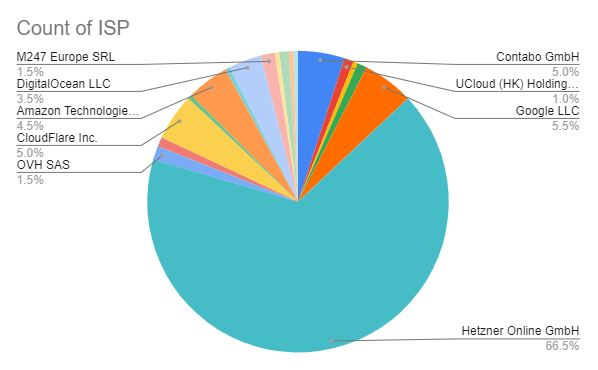

Of the approximately 200 nodes in the Graph’s testnet that were online and correctly responding to pings at the time of query, 90% are located in just 3 countries: US, Finland and Germany, and Germany alone accounts for 50% of the nodes. Moreover, 65% of the nodes use the same VPS provider, Hetzner Online.

But perfect decentralization is vaguely defined anyway; low cost and good performance are what most paying customers seek. So how are those metrics affected by the above findings?

Here’s the list of IPs in the Graph network: https://docs.google.com/spreadsheets/d/1qLb-D9sb0c9wvrIbMUMB63xnXh1DXmsPRfXh6UsSk28/edit?usp=sharing. Let’s query each one and measure the round-trip time (RTT) using `time curl -L '<URL>' -X OPTIONS -w "%{time_total}"`.
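To run the same measurement from a script, here is a minimal sketch using only Python’s standard library. `options_rtt_ms` times one OPTIONS request much as the `curl` command above does; the function names are ours, and the summary uses the sample standard deviation and a linearly interpolated 10th percentile, since the measurements in this article do not state which variants were used.

```python
import statistics
import time
import urllib.error
import urllib.request
from typing import Dict, Iterable, List, Sequence


def options_rtt_ms(url: str, timeout_s: float = 10.0) -> float:
    """Time one OPTIONS request end to end, roughly what curl's %{time_total} reports."""
    request = urllib.request.Request(url, method="OPTIONS")
    start = time.perf_counter()
    try:
        # urlopen follows redirects by default, similar to `curl -L`.
        with urllib.request.urlopen(request, timeout=timeout_s) as response:
            response.read()
    except urllib.error.HTTPError as err:
        # An error status such as 405 still completes a full round trip.
        err.read()
    return (time.perf_counter() - start) * 1000.0


def measure_all(urls: Iterable[str]) -> List[float]:
    """Measure every endpoint once, skipping nodes that are offline or time out."""
    samples: List[float] = []
    for url in urls:
        try:
            samples.append(options_rtt_ms(url))
        except OSError:  # URLError and socket timeouts are both OSError subclasses
            continue
    return samples


def summarize(samples_ms: Sequence[float]) -> Dict[str, float]:
    """Mean, median, sample standard deviation and 10th percentile (needs >= 2 samples)."""
    return {
        "mean": statistics.fmean(samples_ms),
        "median": statistics.median(samples_ms),
        "stdev": statistics.stdev(samples_ms),
        # Linear interpolation between data points; other percentile definitions exist.
        "p10": statistics.quantiles(samples_ms, n=10, method="inclusive")[0],
    }
```

Calling `summarize(measure_all(urls))` with the endpoints from the spreadsheet should give figures comparable to the ones below, though they will depend on where the script runs.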

The results will vary with the location of the host making the queries. Here are the results from the same host in Bengaluru, India, where the earlier 250ms measurement for the Graph’s centralized endpoint was taken:

Mean: 677.5912ms

Median: 546.472ms

Standard Deviation: 498.6831ms

And that’s not all. Unlike the earlier 250ms response, which returned the results of a query, these requests, which take more than twice as long, are only OPTIONS requests: they expect no data in response and thus include no disk fetch or processing time. Depending on each server’s caching configuration and disk/CPU resources, response times can only be expected to increase for meaningful requests.

| Client Location | RTT |
| --- | --- |
| Bengaluru | 296ms |
| US (West Coast) | 140ms |
| Singapore | 361ms |

10th percentile latency from different locations

It is thus clear that decentralization is no silver bullet. Things might improve, but there is no guarantee that a decentralized network of Graph Indexers will automatically either lower the cost of operating the network (including subsidies) or improve performance for users.

CDN for APIs

So, why does uniswap.info take so long to load despite using Cloudflare, a popular and highly distributed CDN provider? The devil’s in the details. A close look at the response headers reveals that Cloudflare is never used to cache query requests to the Graph (“cf-cache-status: DYNAMIC”). Instead, Cloudflare always proxies such requests to the origin server. This is a common strategy among websites with dynamic content, where Cloudflare is used only for DDoS protection.
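One way to check this yourself is to inspect the `cf-cache-status` header on a query response. Below is a minimal Python sketch using only the standard library. The function names are ours, and the header-value meanings in the comments summarize Cloudflare’s documentation as we understand it.

```python
import json
import urllib.request
from typing import Optional

# Values of Cloudflare's `cf-cache-status` response header for which the response
# was fetched from the origin rather than served from Cloudflare's cache:
#   DYNAMIC - not considered eligible for caching; every request is proxied
#   BYPASS  - the origin's Cache-Control headers told Cloudflare not to cache
#   MISS    - not in cache yet; fetched from the origin (may be cached afterwards)
#   EXPIRED - the cached copy had expired; fetched from the origin again
FROM_ORIGIN = {"DYNAMIC", "BYPASS", "MISS", "EXPIRED"}


def fetch_cache_status(url: str, graphql_query: str, timeout_s: float = 10.0) -> Optional[str]:
    """POST a GraphQL query and return the cf-cache-status header, if present."""
    body = json.dumps({"query": graphql_query}).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        # Header lookup on the response message is case-insensitive.
        return response.headers.get("cf-cache-status")


def proxied_to_origin(status: Optional[str]) -> bool:
    """True if Cloudflare forwarded the request to the origin server.

    Returns False when the header is absent, since the status is then unknown.
    """
    return status is not None and status.strip().upper() in FROM_ORIGIN
```

Pointing `fetch_cache_status` at the subgraph endpoint a dApp queries, with any valid GraphQL query, should show whether responses come back as `DYNAMIC`, as described above.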

It is easy to cache static assets such as images and videos. Unsurprisingly, a large percentage of internet traffic is in fact media. CDNs are, thus, very effective in enhancing web and mobile performance.

But traditional CDNs cannot cache content that changes frequently and unpredictably, such as API responses. They work on the basis of a time-to-live (TTL) associated with every asset, after which the asset is purged and re-fetched from the origin. If the TTL is too short, the origin is queried too often, which is the very issue we set out to solve. If the TTL is too long, stale and inaccurate results are returned.
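The TTL trade-off is easy to see in a toy simulation. This is a minimal sketch under assumed numbers (one request per second for a minute, against an origin whose answer changes every 12-second block). `TTLCache` and `simulate` are illustrative names, not any CDN’s API.

```python
import time
from typing import Callable, Dict, Hashable, Tuple


class TTLCache:
    """CDN-style cache: an entry is served until its TTL expires, then re-fetched."""

    def __init__(self, fetch_from_origin: Callable[[Hashable], object], ttl_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch_from_origin
        self._ttl = ttl_s
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, object]] = {}
        self.origin_requests = 0

    def get(self, key: Hashable) -> object:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # served from cache, even if the origin has changed since
        self.origin_requests += 1
        value = self._fetch(key)
        self._entries[key] = (now + self._ttl, value)
        return value


def simulate(ttl_s: float, duration_s: int = 60, block_time_s: int = 12) -> Tuple[int, int]:
    """One request per second against an origin whose answer changes every block.

    Returns (requests that reached the origin, responses that were stale).
    """
    now = 0.0

    def origin(_key: Hashable) -> int:
        return int(now // block_time_s)  # the origin's current answer ("block height")

    cache = TTLCache(origin, ttl_s, clock=lambda: now)
    stale = 0
    for second in range(duration_s):
        now = float(second)
        if cache.get("pool-price") != origin("pool-price"):
            stale += 1
    return cache.origin_requests, stale


for ttl in (1.0, 30.0):
    print(ttl, simulate(ttl))
```

With a 1-second TTL, all 60 requests go to the origin and none are stale. With a 30-second TTL, only 2 reach the origin, but 42 of the 60 responses are stale.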

In e-commerce, as with blockchain dApps, the information of interest is highly dynamic. Just as inventory and prices change frequently during flash sales, blockchain data changes with every new block. Leaving such dynamic content at the origin server slows delivery and increases infrastructure costs, with the service operator being charged twice: by the hosting provider (or indexer) and by the CDN, which simply proxies the API call.

CDNs for APIs require more advanced, customized engineering. In fact, very few traditional CDN companies even provide caching for APIs, and those that do charge a hefty premium.

Marlin Cache

Marlin Cache is based on the simple observation that at any point in time a small subset of dApps is more popular than the rest. For example, visits to Sushi or Rarible come in waves. The indexes serving such requests are updated only once per block interval (~12 seconds). As a result, responses to frequent queries can be cached locally in various geographical regions, reducing round-trip times and stress on the origin servers.

The Cache is event-driven. Nodes maintain subscriptions to popular indexers, and the data held in the cache is updated with every block. Marlin Relay caps the delay between the production of a block and the corresponding update at every cache at around 500ms, even if there’s only one full node supporting the Graph Network (the one-way worst-case latency from the Ethereum miner to the full node plus the latency from the full node to the cache). This delay can be reduced to 250ms if there are a few more full nodes spread across the globe.

Note that this 250ms delay is different from the 250ms delay pointed out for uniswap.info earlier. Once each cache has been updated with the latest block, client requests take only 5-10ms to fetch data. The delay here means that user screens lag by about 250ms after a new block is produced (due to theoretical limits that constrain any system). The Graph and other Ethereum full nodes could independently use the Marlin Relay to reduce this lag.
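To contrast with TTL-based expiry, here is a minimal sketch of the event-driven idea. Instead of expiring on a timer, cached responses for popular queries are refreshed whenever a new block is announced. Everything here is a hypothetical illustration: the `BlockCache` class, the `fetch(query, block)` callback standing in for an indexer request, and the simple hit-count popularity rule. It does not model Marlin Relay or the Cache’s actual protocol.

```python
from typing import Callable, Dict, Hashable, Optional


class BlockCache:
    """Event-driven cache: popular responses are refreshed on every new block.

    Hypothetical sketch. `fetch(query, block)` stands in for a request to an
    indexer at a given block height; popularity is a plain hit count.
    """

    def __init__(self, fetch: Callable[[Hashable, int], object], max_tracked: int = 100):
        self._fetch = fetch
        self._max_tracked = max_tracked
        self._responses: Dict[Hashable, object] = {}
        self._hits: Dict[Hashable, int] = {}
        self.block: Optional[int] = None

    def on_new_block(self, block: int) -> None:
        """Subscription callback: re-fetch the most requested queries at `block`."""
        self.block = block
        popular = sorted(self._hits, key=self._hits.__getitem__, reverse=True)
        self._responses = {q: self._fetch(q, block) for q in popular[: self._max_tracked]}

    def get(self, query: Hashable) -> object:
        self._hits[query] = self._hits.get(query, 0) + 1
        if query in self._responses:
            return self._responses[query]  # hit: answered locally, no indexer round trip
        if self.block is None:
            raise RuntimeError("no block received yet")
        response = self._fetch(query, self.block)  # miss: forward to the indexer once
        self._responses[query] = response
        return response
```

Unlike a TTL, freshness here is tied to block production. Between blocks, every hit is current. After a new block, staleness is bounded by how quickly the notification arrives and `on_new_block` completes, which is the kind of delay the text describes Marlin Relay as capping.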

As with any CDN, routing queries to indexers via the Marlin Cache reduces response times for users from anywhere between 230 milliseconds and 1.5 seconds down to 5-10 milliseconds in most cases where there’s a cache hit. Moreover, to ease integration, Web 3 clients could, like many video players, hard-code a CDN URL as well as a fallback URL to an indexer so that no extra time is lost in case of a cache miss.
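Here is a minimal sketch of that client-side fallback, assuming both the cache and the indexer accept the same GraphQL POST body and return JSON. `http_source`, `query_with_fallback` and the example URLs are hypothetical.

```python
import json
import urllib.request
from typing import Callable, List, Sequence

QuerySource = Callable[[str], dict]


def http_source(url: str, timeout_s: float) -> QuerySource:
    """Build a source that POSTs a GraphQL query to `url` and returns the decoded JSON."""
    def send(query: str) -> dict:
        body = json.dumps({"query": query}).encode("utf-8")
        request = urllib.request.Request(
            url, data=body, headers={"Content-Type": "application/json"}, method="POST"
        )
        with urllib.request.urlopen(request, timeout=timeout_s) as response:
            return json.load(response)
    return send


def query_with_fallback(query: str, sources: Sequence[QuerySource]) -> dict:
    """Try each source in order (e.g. cache first, then indexer); return the first answer."""
    errors: List[Exception] = []
    for source in sources:
        try:
            return source(query)
        except Exception as exc:  # timeout, HTTP error, a cache miss signalled as an error, ...
            errors.append(exc)
    raise RuntimeError(f"all {len(sources)} sources failed: {errors!r}")
```

For example, `query_with_fallback(q, [http_source("https://cache.example.com/<subgraph>", timeout_s=0.05), http_source("https://indexer.example.com/<subgraph>", timeout_s=2.0)])` gives the cache 50ms to answer before falling back. A tight cache timeout bounds the cost of a miss. Racing both requests in parallel would remove that cost entirely, at the price of extra indexer load.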

What you incentivize is what you are guaranteed

It might be tempting to ask whether the Cache is a Graph killer or a Filecoin killer. The answer is neither. The Graph incentivizes the creation of subgraphs, their retrievability and the correctness of responses. Filecoin and Arweave, on the other hand, incentivize storage of content in the network. Rational actors work within the constraints defined by penalties while trying to maximize protocol-provided incentives.

Through its Proof of Proximity, the Cache incentivizes participants to distribute themselves globally and deep into individual geographies. The mechanism ensures that caches respond to requests within certain time limits or risk being downgraded over time. Correctness of responses, on the other hand, is still guaranteed, as caches are required to prove that the response for any subgraph of a subgraph can be derived from the response to the corresponding subgraph provided by an indexer.
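The text does not describe how Proof of Proximity scores nodes. Purely as an illustration of "respond within a time limit or be downgraded over time", here is a hypothetical scoring rule: an exponential moving average of on-time responses. The deadline, the smoothing factor and the `ProximityScore` class are all assumptions, not Marlin’s mechanism.

```python
from dataclasses import dataclass


@dataclass
class ProximityScore:
    """Hypothetical per-node score in [0, 1]; not Marlin's actual mechanism."""
    deadline_ms: float = 20.0  # assumed response deadline for a timed challenge
    alpha: float = 0.1         # weight given to the newest observation
    score: float = 1.0         # 1.0 means every recent response was on time

    def record(self, response_ms: float) -> float:
        """Fold one challenge result into the score and return the new score."""
        on_time = 1.0 if response_ms <= self.deadline_ms else 0.0
        # Exponential moving average: one slow answer costs a little, a streak costs a lot.
        self.score = (1.0 - self.alpha) * self.score + self.alpha * on_time
        return self.score


node = ProximityScore()
for _ in range(10):
    node.record(response_ms=50.0)  # consistently misses the 20ms deadline
print(round(node.score, 3))
```

After ten consecutive late answers the score falls to about 0.35. A node that is occasionally slow but usually close to its users keeps a high score, which matches the "downgraded over time" behaviour described above.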

The incentives for the Cache encourage the operation of low-end nodes (with resource requirements much lower than those of an Ethereum full node) distributed deep into different geographies, whose sole purpose is updating, storing and serving responses to popular API requests.

To summarize, some notable differences of the Cache from any indexer are:

(i) Cache nodes require only a few megabytes of storage, which in most cases fits in RAM, as opposed to the full or archival nodes required by Infura, the Graph or Pokt Network. Lower resource requirements enable much deeper, more localized distribution of nodes, reducing latency to clients.

(ii) The Cache supports contract events as well as balance, address and storage watchers, which Indexers do not provide.

(iii) The Cache can use wider data sources and backends and is not restricted to any particular Indexer or query protocol.

(iv) The Cache provides cryptographic proofs that its responses are derived from the corresponding responses provided by Indexers. As a result, instead of relying on a fisherman scheme to detect malicious responses, clients can reject incorrect responses instantaneously. The Cache thus favours correctness, whereas the Graph’s fisherman scheme favours availability. In both cases, the client may choose to query multiple nodes for higher reliability.
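The text does not specify the proof system behind (iv). As one illustration of how a client can reject a bad response instantly without a fisherman, here is a minimal Merkle-inclusion sketch. An indexer commits to the items of a response with a Merkle root (assumed to reach the client authenticated, e.g. signed), and a cache serves one item plus a proof. This is a standard technique swapped in for illustration, not the Cache’s documented scheme.

```python
import hashlib
from typing import List, Tuple

Proof = List[Tuple[bytes, bool]]  # (sibling hash, True if the sibling is on the left)


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _leaf(item: bytes) -> bytes:
    return _h(b"\x00" + item)  # prefix separates leaf hashes from inner-node hashes


def _node(left: bytes, right: bytes) -> bytes:
    return _h(b"\x01" + left + right)


def root_and_proof(items: List[bytes], index: int) -> Tuple[bytes, Proof]:
    """Indexer side: commit to all items of a response and prove the one at `index`."""
    if not 0 <= index < len(items):
        raise IndexError("index out of range")
    level = [_leaf(item) for item in items]
    proof: Proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # duplicate the last hash on odd-sized levels
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        level = [_node(level[i], level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify(item: bytes, proof: Proof, root: bytes) -> bool:
    """Client side: accept a fragment from a cache only if it hashes up to the root."""
    acc = _leaf(item)
    for sibling, sibling_is_left in proof:
        acc = _node(sibling, acc) if sibling_is_left else _node(acc, sibling)
    return acc == root
```

Verification costs one hash per tree level, so a client can check a cached fragment locally in microseconds. A production design would need more care, for example around odd-sized levels and how the root itself is authenticated.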

Features

Marlin Cache enables faster response times with predefined custom logic at the edge. This ability directly or indirectly enables a number of features that enrich Web 3 experiences:

(i) Caching: dApps can reduce the load on the origin and costs associated with querying the blockchain while also increasing responsiveness for users

(ii) Consistency: With global low-latency connections, dApps and API providers can rest assured that they won’t serve inconsistent responses across channels and users

(iii) Device Optimization: NFT marketplaces, games and IPFS-based dApps in general can optimize content for users in real time, for example, by serving mobile devices lower-resolution images that consume less bandwidth

(iv) Personalization: Tailoring content based on geography and history (for example, not displaying culturally inappropriate art in certain regions) could increase engagement and conversion in Web 3 marketplaces

(v) DDoS protection: Globally and deeply located cache servers can absorb requests much more efficiently while also being in a position to detect malicious attacks more easily

(vi) Real-time analytics: Artists, developers and marketplace owners can receive better insights on user activity and engagement

(vii) Experimental business models: Instead of charging users, dApps could adopt data-based business models that harvest user data in a privacy-preserving fashion (for example, using differential privacy)
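Item (vii) mentions differential privacy. As a textbook illustration, not a description of any Marlin feature, the Laplace mechanism can release an aggregate such as a per-region visit count with calibrated noise. This sketch assumes each user changes the count by at most one (sensitivity 1); the function names are ours.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale): the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random,
                  sensitivity: float = 1.0) -> float:
    """Release `true_count` with epsilon-differential privacy (Laplace mechanism)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)
# Smaller epsilon means more noise and stronger privacy for each individual visitor.
print(private_count(1_000, epsilon=0.5, rng=rng))
```

The noise averages out over many releases but hides any single user’s contribution. A real deployment would use a cryptographically secure noise source and track the cumulative privacy budget across queries.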

Getting started

In short, Marlin Cache strives to bring Web 2-like performance to Web 3 dApps by caching data right next to users. If you are a developer interested in reducing the 250ms to 1.5s latencies incurred by blockchain API requests to less than 10ms, dive right into our docs to learn how you can use the Cache, and join our Discord group if you have any questions.

Make sure you’re always up to date by following our official channels:

Twitter | Telegram Announcements | Telegram Chat | Discord | Website
